In the past, organizations feared delivering software faster, and they mitigated those fears with excessive delays between testing and releasing to production. Because these practices hurt the success of many projects, industry and academia turned those fears into sophisticated techniques to mitigate risk and deliver software safely.

However, as those companies evolved, other worries kept them awake: cyberattacks, infrastructure failures, and misconfigurations. Nowadays, Chaos Engineering is being used to mitigate these concerns [1]. In fact, in the most recent Technology Radar, published by Thoughtworks on April 24th, Chaos Engineering moved from a much-talked-about idea to an accepted one [2].

As organizations large and small begin to implement Chaos Engineering as an operational process, we are learning how to apply these techniques safely at scale. However, this approach is not for everyone: to be effective and safe, it requires organizational support at scale, the design of an implementation plan, a change in cultural mindset, and the construction of evaluation frameworks.

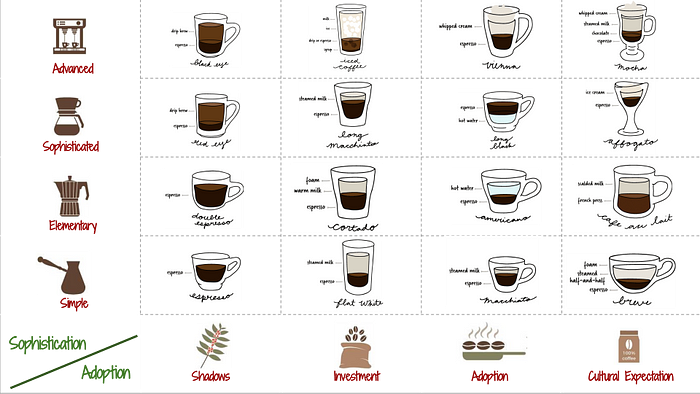

The Chaos Maturity Model (CMM) gives us a way to map out the state of a chaos program within an organization. According to [3], once you plot your program on the map, you can set goals for where you want it to be and compare its placement with that of other programs. The CMM is based on two metrics, sophistication and adoption, which I have represented in the illustration below.

The descriptions of each level that follow are transcribed from the Chaos Engineering book [4].

Sophistication

This metric can be described as elementary, simple, sophisticated, and advanced:

Elementary: Experiments are not run in production. The process is administered manually using forms. The results reflect system metrics, not business metrics, and simple events are applied to the experimental group.

Simple: A few experiments are run in production. There is a self-service setup, automatic execution, and manual monitoring and termination of experiments. The results reflect aggregated business metrics; however, they are manually curated and aggregated.

Sophisticated: Experiments run in production. Setup, result analysis, and termination are automated. Experimentation is integrated with continuous delivery, and business metrics are compared.

Advanced: Experiments run in each step of development and in every environment. Design, execution, and early termination are fully automated. Events include things like changing usage patterns and response or state mutation. Experiments have a dynamic scope and impact to find key inflection points. Revenue loss can be projected from experimental results.

Adoption

This metric can be described as in the shadows, investment, adoption, and cultural expectation:

In the Shadows: Projects are unsanctioned and few systems are covered. There is little or no organizational awareness, and early adopters perform chaos experiments infrequently.

Investment: Experimentation is officially sanctioned. Part-time resources are dedicated to the practice. Multiple teams are interested and engaged, and a few critical services perform chaos experiments infrequently.

Adoption: A team is dedicated to the practice of Chaos Engineering. Incident Response is integrated into the framework to create regression experiments. Most critical services practice regular chaos experimentation. Experimental verifications of incident responses and “game days” are performed occasionally.

Cultural Expectation: All critical services have frequent chaos experiments. Most noncritical services frequently use chaos. Chaos experimentation is part of the engineer onboarding process. Participation is the default behavior for system components and justification is required for opting out.
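To make the idea of plotting a program on the map more concrete, here is a minimal sketch in Python. It is only an illustration, not something taken from the book: the numeric values 1 to 4 simply follow the order in which the levels are listed above, and the `ChaosProgram` class, its `gap_to` method, and the "payments" example are hypothetical names I made up for this post.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Tuple


class Sophistication(IntEnum):
    # Assumption: numeric rank follows the order the levels are listed in.
    ELEMENTARY = 1
    SIMPLE = 2
    SOPHISTICATED = 3
    ADVANCED = 4


class Adoption(IntEnum):
    # Assumption: numeric rank follows the order the levels are listed in.
    IN_THE_SHADOWS = 1
    INVESTMENT = 2
    ADOPTION = 3
    CULTURAL_EXPECTATION = 4


@dataclass(frozen=True)
class ChaosProgram:
    """A chaos program placed on the two CMM axes (hypothetical helper)."""
    name: str
    sophistication: Sophistication
    adoption: Adoption

    def gap_to(self, goal: "ChaosProgram") -> Tuple[int, int]:
        """Levels still to climb on each axis; never negative."""
        return (
            max(0, goal.sophistication - self.sophistication),
            max(0, goal.adoption - self.adoption),
        )


# Hypothetical example: where a program is today and where we want it to be.
current = ChaosProgram("payments", Sophistication.SIMPLE, Adoption.IN_THE_SHADOWS)
goal = ChaosProgram("payments (goal)", Sophistication.SOPHISTICATED, Adoption.ADOPTION)

print(current.gap_to(goal))  # (1, 2)
```

Using `IntEnum` keeps the levels comparable, so two programs can be ranked on either axis with ordinary `<` and `>`. Treating the levels as evenly spaced integers is a simplification; the model itself describes qualitative stages, not scores.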